Website crawlability is the ease with which search engine crawlers can access and index a website’s content. To be as crawlable as possible, a website needs to be well-structured, with accessible URLs and clearly defined internal links. Mobile friendliness, clean code, and fast page loads also contribute to better crawlability. XML sitemaps and robots.txt files help guide search engine bots. Avoiding duplicate content and repairing broken links also help search engines index pages accurately. Improving a site’s crawlability makes it more visible to search engines and helps ensure that all relevant content is found and ranked appropriately.

Website Crawlability Checker

Crawlability checkers are tools that analyze a website’s structure and identify issues that may hinder search engine bots from scanning and indexing its pages. Popular tools include Google Search Console, Screaming Frog SEO Spider, and Ahrefs Site Audit. These tools highlight problems like slow loading speeds, broken links, missing sitemaps, and inaccessible content. By identifying crawlability issues, website owners can increase their sites’ exposure to search engines and ensure that important pages are properly indexed.

Website Crawlability Example

A well-structured blog with internal links in every article is a good example of a crawlable website: search engine bots can easily navigate and index its content. The site has an XML sitemap that lists all of the important pages, as well as a properly configured robots.txt file to guide search engines. Fast loading times and mobile optimization further improve crawlability. When URLs are clean and the site is free of broken links, search engines can access and crawl all important pages, which increases search visibility.

How To Check If a Website Is Crawlable

To determine whether a website is crawlable, start by reviewing crawl errors and indexing issues in Google Search Console. A tool such as Screaming Frog SEO Spider can crawl your website and find obstacles like unavailable pages, broken links, or missing sitemaps. Use tools like PageSpeed Insights to assess a page’s mobile friendliness and load speed, since both affect crawlability. Following these steps helps make your website more search engine friendly.
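To make the broken-link check more concrete, here is a minimal Python sketch of the kind of check a crawling tool performs. It is not how Screaming Frog or any specific tool works internally. The sample HTML, the `example.com` URLs, and the `fetch_status` callable (a stand-in for whatever function returns the HTTP status code of a URL) are all assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Turn relative links into absolute URLs.
                self.links.append(urljoin(self.base_url, value))


def internal_links(html, base_url):
    """Return the links in html that point to the same host as base_url."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    host = urlparse(base_url).netloc
    return [link for link in collector.links if urlparse(link).netloc == host]


def find_broken(links, fetch_status):
    """Return the links whose HTTP status is 400 or higher.

    fetch_status is a hypothetical callable: URL in, status code out.
    """
    return [link for link in links if fetch_status(link) >= 400]


# Hypothetical page and URLs, used only for illustration.
BASE_URL = "https://example.com/blog/post-1.html"
SAMPLE_HTML = """
<a href="/about">About</a>
<a href="post-2.html">Next post</a>
<a href="https://other.example.org/">Partner site</a>
"""

if __name__ == "__main__":
    # Stubbed status codes so the sketch runs without network access.
    statuses = {
        "https://example.com/about": 200,
        "https://example.com/blog/post-2.html": 404,
    }
    links = internal_links(SAMPLE_HTML, BASE_URL)
    print(find_broken(links, statuses.get))
```

In real use, `fetch_status` would issue an HTTP request for each URL. Passing it in as a parameter keeps the link-extraction logic testable without touching the network.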

How Do I Know If My Website Is Crawlable?

First, look for crawl errors or indexing problems in Google Search Console to see whether your website can be crawled. Next, scan your website with a tool like Screaming Frog SEO Spider to find possible issues, such as broken links or unavailable pages. Then check your XML sitemap and robots.txt file to make sure both are configured correctly. Finally, use tools like PageSpeed Insights to assess the site’s mobile friendliness and loading speed. Identifying and resolving crawlability issues in this way can improve search engine visibility.
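The robots.txt check can be done with Python's standard `urllib.robotparser` module. The sketch below tests whether a given crawler may fetch a given URL under a set of rules. The robots.txt content and the `example.com` URLs are made up for illustration, and Python's parser may interpret edge cases differently from a particular search engine's own parser.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt that blocks only the admin area.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /admin/
"""

if __name__ == "__main__":
    for path in ("/blog/post-1", "/admin/settings"):
        url = "https://example.com" + path
        print(path, is_allowed(SAMPLE_ROBOTS, "Googlebot", url))
```

To test a live site instead, `RobotFileParser` also provides `set_url()` and `read()`, which download the file before `can_fetch()` is called.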

What Is Crawlability Of a Website?

A website’s crawlability is the ease with which search engine bots can access and explore its pages for indexing. A well-structured website with internal links, clear URLs, and up-to-date sitemaps is easier to crawl. Mobile friendliness and optimized page speeds also help. It is equally important to make sure that nothing is blocking bot access, such as overly restrictive rules in the robots.txt file. Finally, fixing problems like broken links and duplicate content improves crawlability, which helps search engines index relevant pages and can improve rankings. Good crawlability is a prerequisite for strong visibility in search results.

How Can You Identify If a User On Your Site Is a Web Crawler?

To determine whether a visitor to your website is a web crawler, first look at the user-agent string in your server logs. Typical crawler user-agent strings contain names such as “Googlebot” or “Bingbot.” Keep in mind that a user-agent string can be spoofed, so a match is a strong hint, not proof. Next, watch for behavior patterns such as frequent, rapid requests to many pages, which are common for crawlers. A robots.txt file can tell crawlers which parts of the site to avoid, but it is advisory: well-behaved crawlers follow it, while unwanted ones may ignore it. Lastly, server log analysis tools and Google Search Console provide further information about crawler activity on your website.
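The user-agent check described above can be sketched in a few lines of Python. This example assumes access logs in the common "combined" format, where the user agent is the last quoted field on each line. The sample log lines and IP addresses are made up, and the token list is deliberately short and not exhaustive.

```python
import re
from collections import Counter

# Lowercase substrings that appear in the user-agent strings of the
# crawlers named in the text. Assumption: extend this list as needed.
CRAWLER_TOKENS = ("googlebot", "bingbot")

# In the combined log format, the user agent is the last quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def crawler_name(log_line):
    """Return the matching crawler token for a log line, or None."""
    match = UA_PATTERN.search(log_line)
    if not match:
        return None
    user_agent = match.group(1).lower()
    for token in CRAWLER_TOKENS:
        if token in user_agent:
            return token
    return None


def count_crawler_hits(lines):
    """Count requests per crawler token across an iterable of log lines."""
    counts = Counter()
    for line in lines:
        name = crawler_name(line)
        if name:
            counts[name] += 1
    return counts


# Made-up log lines using documentation-only IP addresses.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Oct/2024:13:55:40 +0000] "GET / HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '198.51.100.7 - - [10/Oct/2024:13:56:02 +0000] "GET /about HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"',
]

if __name__ == "__main__":
    print(dict(count_crawler_hits(SAMPLE_LOG)))
```

Because this only matches on the claimed user agent, it counts spoofed requests as well. For stricter verification, the user-agent match can be combined with a reverse DNS lookup on the requesting IP address.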

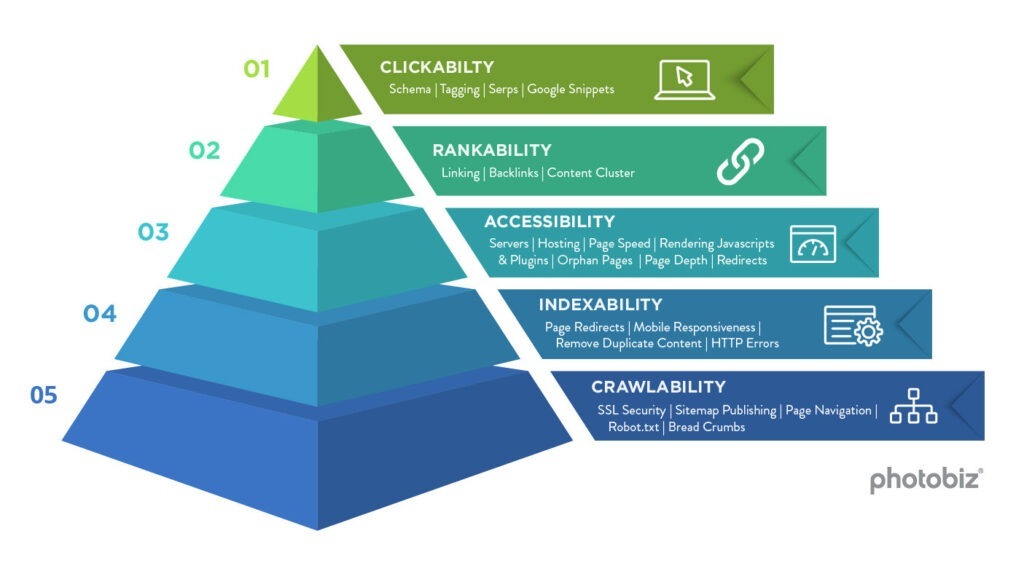

How to Improve Website Crawlability And Indexability

To improve crawlability and indexability, start by making sure your website is well-structured, with intuitive navigation and internal links. Next, create an XML sitemap and submit it to search engines to direct them to all of the key pages. Make sure your robots.txt file allows bots to crawl the pages you want indexed. Ensure that your pages load quickly and are optimized for mobile devices. Lastly, fix broken links and remove duplicate content to help search engines index your website properly, which in turn can improve visibility and rankings.
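As an illustration of the sitemap step, the following Python sketch builds a minimal XML sitemap with the standard library. It includes only the required `<loc>` element for each URL, and the `example.com` URLs are placeholders. Most content management systems and SEO plugins generate sitemaps automatically, so treat this as a way to see the format, not as a recommended workflow.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as "&" in the URL.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs, used only for illustration.
SAMPLE_URLS = [
    "https://example.com/",
    "https://example.com/blog/post-1",
]

if __name__ == "__main__":
    print(build_sitemap(SAMPLE_URLS))
```

The resulting string would be saved as a UTF-8 file (commonly named `sitemap.xml`) at the site root and then submitted through a tool such as Google Search Console.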

How To Make a Website Crawlable

To be crawlable, a website first needs a clear, well-structured layout with internal links and easy navigation. Next, create and submit an XML sitemap to help search engines locate all important pages. Make sure the robots.txt file is set up properly so that search engines can reach those pages. To improve crawl efficiency, make your site mobile-friendly and optimize page speed. Finally, fix broken links, eliminate duplicate content, and remove any other obstacles that could impede search engine bots.